Memo · Published February 28, 2024

Lethal Autonomous Weapons 101

Mike Sexton & Erik Carneal

The AI revolution is bringing unprecedented change to every facet of human life and society, and warfare is no exception. AI makes it easier to cheat on your homework and spin up fake news; it also makes it easier to discover life-saving medical treatments and craft legal defenses for the indigent. So what is going to happen with AI-enabled robotic weapons?

Takeaways

- Lethal autonomous weapons predate the computer era and are in use in offensive and defensive warfare throughout the world today.

- Incorporating autonomy into weapons can make them safer and more precise in the immediate term but adds new risk in the longer term.

- The next generation of autonomous weapons has a small but non-negligible risk of being hacked.

- A rogue AGI that causes widespread destruction is highly speculative, but too grave a possibility to dismiss.

- The enemy gets a vote. International agreements to safeguard humans from autonomous weapons are necessary, but rogue actors could ignore them.

While “killer robots” sound newfangled, lethal autonomous weapons (LAWs) are not new. Many LAWs currently in use are decades old. Even the mine, a very old weapon, is a lethal autonomous weapon.1

It may be counterintuitive, but because autonomy can improve not just the efficiency but the accuracy of weapons, some autonomy can actually make weapons more humane.2 Precision-guided munitions incorporate a degree of autonomy in order to be more accurate than a purely human-aimed “dumb” bomb, thereby saving civilian lives. So while it makes sense to be wary of automation in warfare, the reality of its impact is more complex than our intuitions would lead us to believe.

Our intuitive aversion to automated warfare is not baseless, though. Autonomous and smart weapons have a small but non-negligible chance of being hacked, and recent AI breakthroughs only make this easier. Worse yet, an unscrupulous military could hypothetically rely on such weapons to automate mass killings, circumventing its troops’ consciences.

Perhaps the most prominent threat, however, comes from artificial general intelligence (AGI), a hypothetical future AI that is vastly smarter than humans.3 A rogue AGI (i.e., an AGI that has turned against humans) may be able to hack autonomous weapons at scale, commandeering a robotic military of its own and turning it against humanity. While this is a highly speculative scenario, it is much too grave and plausible to dismiss.

However, it is important to remember the maxim that in war, the enemy gets a vote: LAWs are not developed in a vacuum, but to rival adversary LAWs and to overcome asymmetric threats like piracy and guerrilla-style fighting. For better or worse, near- and medium-term considerations, including humanitarian concerns, have contributed to the diffusion of lethal autonomous weapons.

To better understand whether advances in AI and weaponry will make warfare more or less humane, it is more useful to learn which lethal autonomous weapons already exist than to hear what any one person thinks about them. For that reason, this memo emphasizes exposition over analysis and policy recommendations.

It is hard to imagine any international rule that will conclusively resolve commonsense humanitarian concerns about autonomous weapons. An intuitive requirement that every “autonomous” weapon be fired manually simply will not work for missile defense systems like the Phalanx CIWS.4 Furthermore, the Iran Air Flight 655 disaster at the hands of a human-supervised Aegis combat system underscores that such human review is no guarantee of better results.5

That does not mean policy progress on autonomous weapons is doomed, however. Incremental agreements—e.g., requiring certain precautions in urban areas, or limiting certain classes of autonomous weapons like turrets—are realistic and worth exploring. The key to achieving meaningful policy change with respect to lethal autonomous weapons will be striving for progress, not perfection.

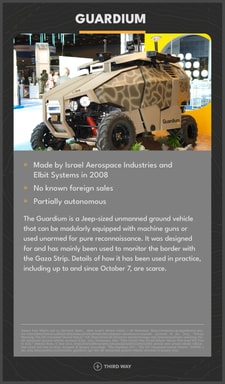

Lethal Autonomous Weapons

- Rex MK II

- Aegis Weapon System

- Guardium

- Harpy drone

- Harop drone

- Phalanx Close-In Weapon System (CIWS)

- KUB-BLA

- Shahed 136

- Lancet

- Switchblade 300

- Switchblade 600

- Protector USV

- Sentry Tech

- SGR-A1 Turret