Report Published May 25, 2018

What Matters Most for College Completion? Academic Preparation Is a Key to Success

Matthew M. Chingos

Executive Summary

A student’s academic preparation in high school is one of the strongest predictors of college degree attainment. Carefully designed research has found that students who take rigorous coursework and earn higher grades are more likely to score well on college entrance exams and to be better prepared to succeed in college. Student-level characteristics such as gender and race are also meaningfully correlated with college completion rates. Policymakers cannot change a student’s gender or race, but they can presumably influence the disparities in outcomes by gender and race. As Matthew Chingos notes in this report, one of the most important things policymakers can do is ensure that more students are academically prepared for college by the time they arrive on campus.

A handful of school-level initiatives have proved effective in raising students’ academic readiness in high school. One carefully studied example found that students who took two algebra classes concurrently (rather than a single math class) had much higher high school graduation rates, college entrance exam scores, and college enrollment rates. Another study found that students who enrolled in more rigorous courses, after controlling for selection bias, also had higher high school graduation and college enrollment rates. Other factors, such as teacher quality, school resources, and family environment, also drive student learning, which positions students to be more successful in college. Although any program risks producing different results than originally intended once it is scaled up, policymakers should look for ways to carefully replicate and study successful K–12 programs that enhance student readiness as part of a larger effort to increase postsecondary attainment.

In this report, Chingos reviews what is known about academic preparation and the largest determinants of college readiness. He then proposes a series of recommendations that policymakers might consider as they look to improve college completion rates.

— Rick Hess and Lanae Erickson Hatalsky

It is well-known that students with higher levels of academic preparation are more likely to enroll in and graduate from college. But discussions of college completion tend to focus on policies, such as financial aid, and institutional factors, such as student support services. This makes sense from the perspective of higher education policymaking, which can do little to change entering students’ characteristics beyond changing admissions practices to exclude less-prepared students. However, that would not increase completion overall and would make the system even more inequitable.

Colleges should continue to focus on how they can best serve the students they enroll, but that task would be easier if students arrived on campus better prepared to do college-level work. The goal of this paper is twofold: to provide a high-level overview of what we do and do not know about the student-level factors that predict college success and to discuss what the strong correlation between academic preparation and college completion does and does not mean for policy and practice.

Many factors affect college completion. Demographic characteristics, such as race, ethnicity, and socioeconomic status, consistently predict college enrollment and success rates. Troubling disparities between students of color and their white peers and among students from different socioeconomic backgrounds persist even after adjusting for differences in academic preparation.1

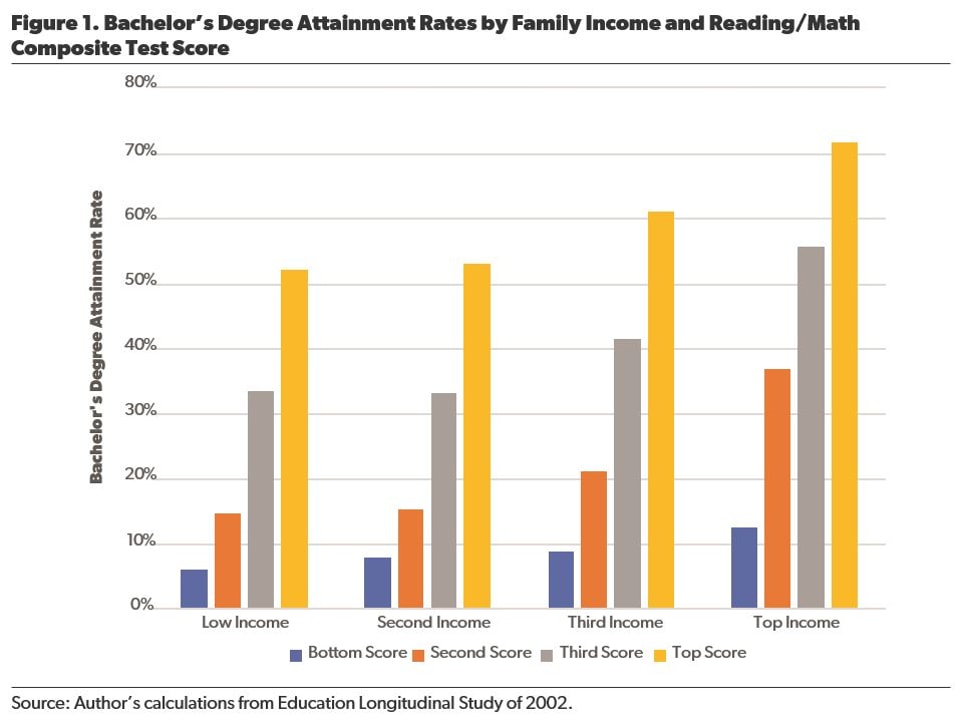

But academic preparation, including student ability, matters most, at least in terms of how strongly it predicts success in college. Figure 1, which is broken down by family income and standardized test scores, shows the rates at which a nationally representative sample of 10th-grade students attained bachelor’s degrees a decade later.

Among students in the same family income group, those whose test performance was in the top quarter nationally were 45–59 percentage points more likely to earn a bachelor’s degree by age 26 than were students in the bottom quarter of test scores. Differences in attainment rates among students with similar test scores but different incomes were smaller but still pronounced: 7–22 percentage points between the top and bottom income quartiles.

Academic performance in high school predicts not only bachelor’s degree attainment but also the rates at which students attain any postsecondary credential, including certificates and associate degrees. These rates are 34–39 percentage points higher for students in the top quarter of test takers than for students from the same income group who scored in the bottom quarter.2 Once again, the differences in attainment rates between the top and bottom income groups (among students with similar test scores) are not as large (15–21 percentage points).

Moving beyond the simplistic test score example above, I will first review the empirical evidence on how strongly various measures of academic preparation predict college success. Often-cited measures include college admissions test scores; high school grades; courses taken, including Advanced Placement; and scores on other tests designed to measure “college readiness.” Noncognitive factors such as student motivation and grit are also surely important and are the focus of Mesmin Destin’s paper in this series.3

Second, I will provide a conceptual framework for considering how improving preparation might translate into greater success in college and increased degree attainment. I will discuss how far the predictive power of preparation measures can be used to infer the likely effects of interventions that improve academic preparation, given that those measures also capture unmeasured student ability and family characteristics. This discussion bears on whether policy interventions should target particular preparation measures.

Measuring Academic Preparation

How do different measures of preparation predict college completion? Two commonly used measures to summarize students’ high school performance (including for admission to selective four-year colleges) are scores on the SAT or ACT college admissions tests and grade point average (GPA) at the end of high school.

Test Scores and Grades

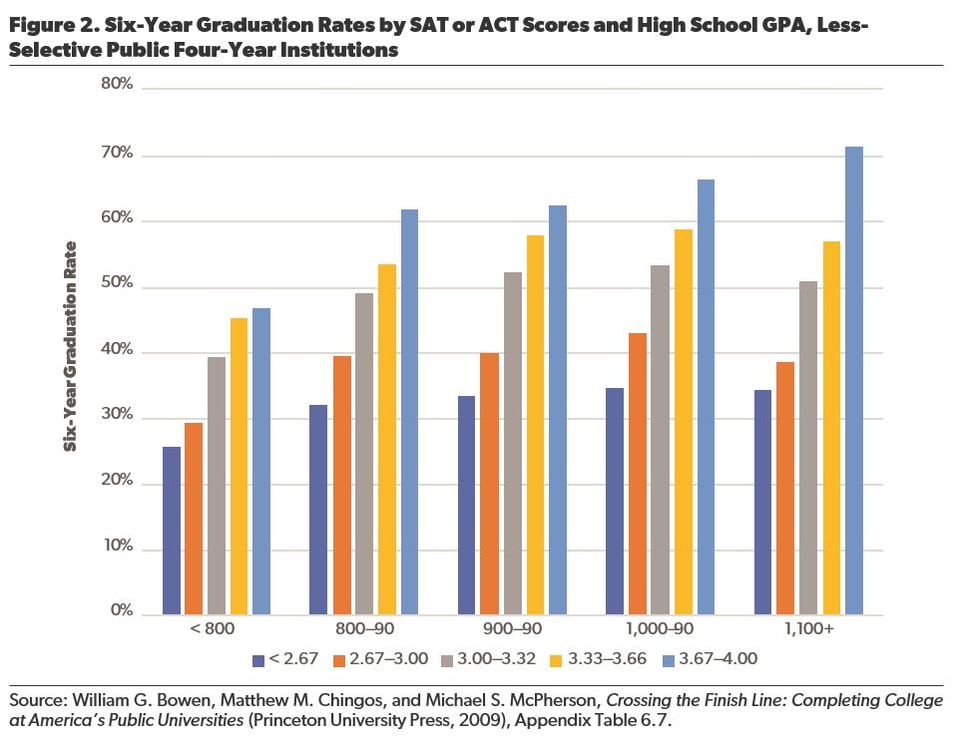

Both SAT or ACT scores and high school GPA are associated with the likelihood that students at four-year colleges earn a bachelor’s degree. But when considered together, the predictive power of high school GPA is much stronger. Figure 2 shows that, among students with similar SAT or ACT scores, those with higher high school GPAs are much more likely to graduate. But among students with similar high school GPAs, no strong relationship exists between SAT or ACT scores and graduation rates (except that those who score below 800 are noticeably less likely to complete college).

This makes sense given that earning good grades requires consistent behaviors over time—showing up to class and participating, turning in assignments, taking quizzes, etc.—whereas students could in theory do well on a test even if they do not have the motivation and perseverance needed to achieve good grades. It seems likely that the kinds of habits high school grades capture are more relevant for success in college than a score from a single test.

The data in Figure 2 are for a group of less-selective (i.e., average SAT or ACT score below 1150) public colleges and universities in four US states, but the much stronger predictive power of high school GPA relative to SAT or ACT scores holds across a wide range of public institutions.4 And the relatively weak predictive power of SAT or ACT scores vanishes entirely once the student’s high school is taken into account, suggesting that the test scores serve partly as a proxy for high school quality.

Why do test scores so strongly predict bachelor’s degree attainment on their own (see Figure 1) but not graduation rates once high school GPA is taken into account? Part of the reason is that students with higher test scores are both more likely to enroll in any college and to enroll in more selective colleges (presumably in part because of the use of SAT or ACT scores in the admissions process).5

In other words, students with higher test scores are more likely to graduate from college in part because they tend to attend better-resourced institutions that graduate more of their students. For an example of this, see Table 1 in the related paper by Mark Schneider and Kim Clark on institutional effects, where the completion rate is over 90 percent at some of the most selective universities.6 On the same campus, however, these students are not much more likely to graduate than students with lower test scores but similar high school grades.

The relative strength of SAT or ACT scores and high school GPA in predicting college completion depends on various analytic choices. But one finding is consistent: These relationships are generally quite smooth. In other words, there does not appear to be a level of grades or test scores at which a student’s chances of finishing college jump dramatically.

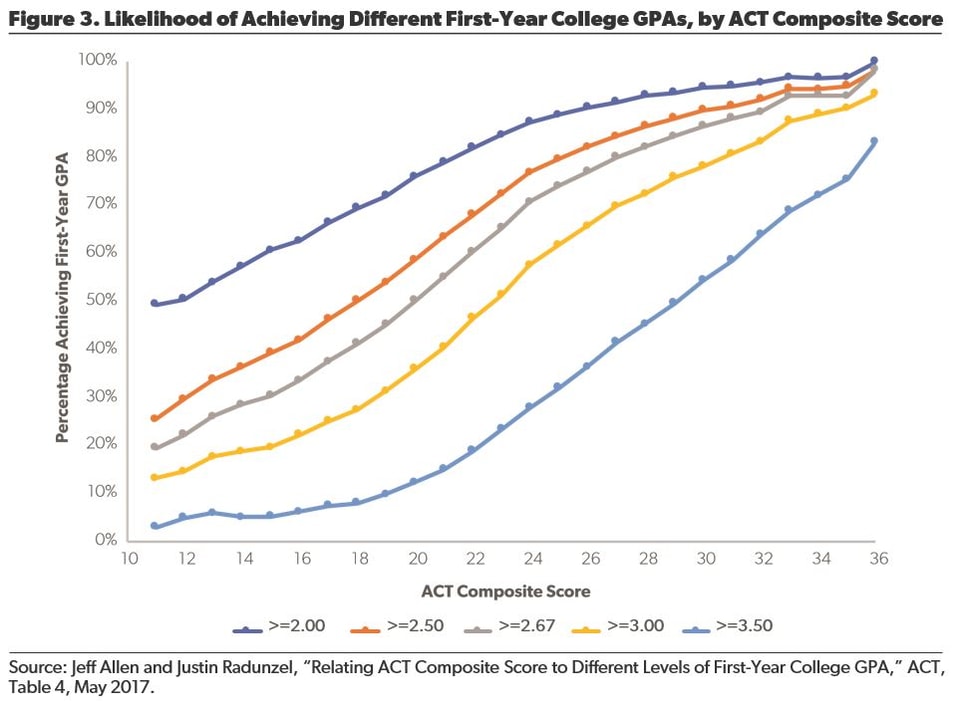

This is apparent in Figure 2, as well as in Figure 3, which shows the relationship between ACT composite scores and the likelihood that a student will achieve a target first-year GPA in college. College GPA is the outcome the College Board and ACT typically use to assess the validity of their tests, and SAT or ACT scores are more predictive of college grades than they are of college graduation rates.7

The smoothness of these relationships is important because it runs counter to efforts to identify “benchmarks” that indicate college readiness. For example, the ACT defines its college readiness benchmarks as the scores that predict a 50 percent chance of earning at least a B average in the first year of college.8 In Figure 3, this threshold is reached at an ACT score of 23.

But there is no evidence that reaching this threshold has any real meaning relative to other arbitrarily selected thresholds. For example, a score increase from 21 to 23 is associated with an increase in the chance of earning a B average of about 10 percentage points—but so is a score increase from 23 to 25.
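To see why a benchmark at 23 carries no special meaning, consider a hypothetical smooth curve. The sketch below assumes a logistic relationship between ACT score and the chance of earning at least a B average, centered at 23 and with a slope chosen only so that a 2-point gain near 23 is worth about 10 percentage points, as in the text. It is not the ACT’s actual model, and the names `chance_of_b_average` and `two_point_gains` are illustrative.

```python
import math

# Hypothetical: the score at which the assumed curve crosses a 50 percent chance.
BENCHMARK = 23
# Hypothetical slope, chosen so that a 2-point gain around the benchmark moves
# the probability by 10 percentage points (the figure cited in the text).
SLOPE = math.log(1.5) / 2


def chance_of_b_average(act_score: float) -> float:
    """Assumed logistic model of P(first-year GPA of at least B) given an ACT score."""
    return 1.0 / (1.0 + math.exp(-SLOPE * (act_score - BENCHMARK)))


def two_point_gains(scores):
    """Percentage-point change in the chance of a B average for each 2-point gain."""
    return {
        (s, s + 2): 100 * (chance_of_b_average(s + 2) - chance_of_b_average(s))
        for s in scores
    }


if __name__ == "__main__":
    for (low, high), gain in two_point_gains(range(15, 31, 2)).items():
        print(f"{low} -> {high}: +{gain:.1f} percentage points")
```

Under this assumed curve, the gains from 21 to 23 and from 23 to 25 are identical, and the gains shrink only gradually farther from the middle. No step produces a jump, so the point where the curve crosses 50 percent is a labeling choice rather than a threshold in the underlying relationship.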

Benchmarks may be useful for some purposes, but they do not provide much actionable information for policymakers or educators because college readiness is a continuum, not a state of being. To borrow a medical analogy, measures of college readiness are more like the body mass index than a pregnancy test.

The SAT and ACT are not the only tests aimed at measuring college readiness. State tests increasingly have this goal, including those developed by consortia of states to assess student performance against the Common Core State Standards, namely the Partnership for Assessment of Readiness for College and Careers (PARCC) and the Smarter Balanced Assessment Consortium (SBAC). The available evidence indicates that these tests are generally similar to college admissions tests in how strongly they predict college grades.9 The test from each Common Core consortium has been the subject of one study. The study of PARCC tests finds predictive power similar to that of an existing state test, the Massachusetts Comprehensive Assessment System.10 The study of SBAC tests finds predictive power similar to that of the SAT and finds that both tests are weaker predictors than high school GPA.11

The available evidence indicates that a test is a test is a test when it comes to predicting success in college.12 But this conclusion has to be qualified by the fact that most of this research focuses on college grades (usually in the first year) and not on college completion, and some evidence shows that scores on Advanced Placement and SAT subject exams can better predict college completion than SAT or ACT scores can.13 More work is needed to assess how strongly the new generation of college readiness measures, especially those tied to state standards, predict which students are most likely to complete a college degree—not to mention how to help those who may be less likely to succeed.

High School Coursework

Students’ readiness for college is assessed not just by summary measures such as test scores and grades but also by the content and rigor of their high school coursework. Jacob Jackson and Michael Kurlaender review a sizable body of research documenting that students who take more rigorous high school courses are more likely to succeed in college.14 For example, Clifford Adelman finds that the highest level of mathematics a student takes is an important predictor of degree attainment.15

Jackson and Kurlaender highlight multiple explanations for the relationship between rigorous high school coursework and future success, including the richer curriculum, higher-ability peer groups, and the likelihood that better teachers are assigned to advanced courses. But they also caution that the relationship between course-taking and future success may be exaggerated by unmeasured traits of students who take these courses, such as their ability, motivation, and work ethic. Family characteristics such as parental education and income likely play a role as well.

Careful attempts to isolate the causal effect of taking more rigorous high school courses suggest that selection bias is unlikely to fully account for the relationship between courses taken and future success. Mark Long and colleagues find that students who take rigorous courses in high school tend to score higher on tests in high school and are more likely to graduate from high school and enroll in college, compared to students with similar eighth-grade test scores at the same school who did not take such rigorous courses.16

Kalena Cortes and colleagues evaluate a Chicago Public Schools policy that assigned students with below-median math performance in eighth grade to a “double dose” of algebra (two instructional periods instead of one) in ninth grade.17 To isolate the effect of the policy, they compare students who scored just below the participation threshold (the median math score), and were therefore eligible for the intervention, to students who scored just above it and so just missed being eligible. These two groups were nearly identical except that one received a double dose of algebra and the other only a single dose. The researchers find that the double dose of algebra increased test scores, high school graduation rates, and college enrollment rates.
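Comparing students just on either side of an eligibility cutoff is known as a regression discontinuity design. The simulation below is a hypothetical sketch with invented numbers (an assumed true effect of 0.10 on an outcome that also rises with eighth-grade score); it is not the specification Cortes and colleagues used. It illustrates why a naive comparison of all eligible and ineligible students misleads when the intervention goes to lower scorers, and how fitting a line on each side of the cutoff, within a narrow band, recovers the assumed effect. All names and parameters are illustrative.

```python
import random
import statistics

TRUE_EFFECT = 0.10  # hypothetical boost from the double dose, in outcome units
SCORE_SLOPE = 0.30  # hypothetical: the outcome also rises with eighth-grade score
BANDWIDTH = 0.25    # only students this close to the cutoff enter the RD estimate


def simulate_students(n=20_000, seed=0):
    """Hypothetical cohort of (eighth-grade math score, later outcome) pairs."""
    rng = random.Random(seed)
    scores = [rng.uniform(-1, 1) for _ in range(n)]
    cutoff = statistics.median(scores)
    students = []
    for s in scores:
        treated = s < cutoff  # below-median students get two periods of algebra
        outcome = 0.5 + SCORE_SLOPE * s + (TRUE_EFFECT if treated else 0.0) + rng.gauss(0, 0.2)
        students.append((s, outcome))
    return students, cutoff


def naive_difference(students, cutoff):
    """Mean outcome of all treated students minus that of all untreated students."""
    treated = [y for s, y in students if s < cutoff]
    untreated = [y for s, y in students if s >= cutoff]
    return statistics.fmean(treated) - statistics.fmean(untreated)


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b


def rd_estimate(students, cutoff, bandwidth=BANDWIDTH):
    """Local linear regression discontinuity estimate at the cutoff."""
    # Center scores at the cutoff so each fitted intercept is the prediction there.
    below = [(s - cutoff, y) for s, y in students if cutoff - bandwidth <= s < cutoff]
    above = [(s - cutoff, y) for s, y in students if cutoff <= s <= cutoff + bandwidth]
    a_below, _ = fit_line(*zip(*below))
    a_above, _ = fit_line(*zip(*above))
    return a_below - a_above


if __name__ == "__main__":
    students, cutoff = simulate_students()
    print(f"Naive difference:    {naive_difference(students, cutoff):+.3f}")
    print(f"RD estimate at cut:  {rd_estimate(students, cutoff):+.3f}")
    print(f"Assumed true effect: {TRUE_EFFECT:+.3f}")
```

Because eligible students are lower scorers by construction, the naive difference comes out negative in this simulation even though the assumed effect is positive. Restricting attention to students near the cutoff and adjusting for the remaining slope in scores removes that bias.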

Unfortunately, few studies have measured whether interventions aimed at boosting college readiness in high school affect graduation from college. This presumably stems from the long period that must pass before college completion can be accurately measured, which also means that such information is dated by the time it is available. It stands to reason that interventions that improve a series of earlier outcomes (test scores, high school graduation, and college enrollment) will also increase degree attainment. But this is not always the case, because earlier outcomes are imperfect guides to later ones. The evidence reviewed above showed that test scores are much weaker predictors of college completion than they are of first-year college grades. And the double-dose algebra study found a mismatch in the opposite direction: The intervention’s impact on long-term outcomes was much greater than its impact on test scores would have predicted.18

It is important to remember that an intervention that increases both college enrollment and degree attainment may not appear to increase graduation rates, that is, the share of college entrants who go on to complete a degree. This would happen if the intervention draws into college students whose chances of finishing a degree are below average. They would pull down the average completion rate even though they are much more likely to earn a degree than if they had never gone to college. Adding to the pool of college-goers students who would not have attended without the intervention can therefore increase the number of students completing a degree without increasing the proportion of entrants who finish.
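A small worked example with hypothetical numbers makes the arithmetic concrete. Suppose 500 students enroll and 300 finish (a 60 percent completion rate), and an intervention draws in 100 additional students who each have a 35 percent chance of finishing, while leaving the original enrollees’ outcomes unchanged. The sketch below uses illustrative names (`Cohort`, `add_marginal_enrollees`) and invented figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cohort:
    enrolled: int
    completers: int

    @property
    def completion_rate(self) -> float:
        """Share of enrolled students who finish a degree."""
        return self.completers / self.enrolled


def add_marginal_enrollees(base: Cohort, new_enrollees: int, new_rate: float) -> Cohort:
    """Add students drawn into college by an intervention.

    Assumes (hypothetically) that the intervention leaves the original
    enrollees' outcomes unchanged and that newcomers finish at `new_rate`.
    """
    return Cohort(
        enrolled=base.enrolled + new_enrollees,
        completers=base.completers + round(new_enrollees * new_rate),
    )


if __name__ == "__main__":
    before = Cohort(enrolled=500, completers=300)
    after = add_marginal_enrollees(before, new_enrollees=100, new_rate=0.35)
    print(f"Degrees: {before.completers} -> {after.completers}")
    print(f"Completion rate: {before.completion_rate:.1%} -> {after.completion_rate:.1%}")
```

In this example, degrees rise from 300 to 335, but the completion rate falls from 60 percent to about 56 percent, because the newcomers finish at a lower rate than the students who would have enrolled anyway.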

Interventions That Boost Readiness

College readiness indicators vary not only in how much they predict college success but also in their suitability for intervention. Evidence about the effects of taking particular courses is among the most relevant for policymakers and practitioners, as the obvious implication is to encourage more students to take courses shown to improve enrollment and success in college.

A positive association between taking a certain course and later success may not be causal, so this point should not be taken too far. And of course there are always risks that a scaled-up effort may yield different effects than an initial pilot. But when there is strong evidence, such as the study of double-dose algebra previously discussed, it can offer relatively clear guidance for both policy and practice.

Test scores are something of a counterexample. It is easy to come up with extreme examples of interventions, such as test preparation or even cheating, that are likely to boost test scores but not college completion. At the same time, test scores can be a useful barometer for assessing the effects of other interventions. More research is needed that specifically links performance on different kinds of tests to college completion, but evidence suggests that tests tied to learning specific content (for example, in a course) are more closely linked to college completion than general tests of ability such as the ACT or SAT (even if those tests are not marketed as such).19

High school grades share some of the same properties, in that inflating students’ grades is unlikely to increase college completion. And enrolling students in easier courses could potentially both increase their GPA and harm their chances of succeeding in college. But grades have an important advantage over test scores in that they measure more than just what students can demonstrate they know in a given moment. Grades also capture whether a student shows up to class each day, consistently turns in assignments on time, and engages in other behaviors that are likely useful in a range of settings, including success in college.

The bottom line is that high school grades represent a more useful conceptual frame for college readiness than test scores do. Increasing how much students learn in their high school courses should improve their grades. And the interventions and strategies used to improve their grades might also improve college success independent of learning material specific to a given course. We would also expect those improvements to appear on standardized tests related to the course material, but the test would likely miss the broader effects that are not specific to the course content.

Tests are still a useful tool for policymakers seeking to create incentives for success in high school. Holding students, teachers, or schools accountable for achieving certain grades in key courses would almost surely lead schools to push students into easier courses and to inflate their grades. That outcome would not be so different from what has happened in schools that passed students (in some cases all the way through graduation) even when they routinely did not show up to school, as was recently highlighted in Washington, DC.20 Incorporating scores on end-of-course tests into measures of school performance would be one strategy for mitigating such unintended consequences.

Recommendations

Increasing the academic preparedness of students before they arrive on college campuses holds significant promise for improving postsecondary educational attainment in the United States. And efforts targeted toward groups of students who have historically arrived at college less well prepared have the potential to narrow the troubling disparities in educational attainment that persist along lines of race and class.

Ensuring that efforts to improve college readiness do more good than harm requires paying careful attention to the incentives they create for students, secondary schools, and postsecondary institutions. Unintended consequences to avoid include further inflating grades, granting high school diplomas to students who are not well prepared, and discouraging students who would benefit from college from ever enrolling. Instead, policymakers should work to increase the number of students who enroll in high-quality postsecondary programs that are a good fit for them, whether at a four-year college, a local community college, or a vocational school.

Research that is rigorous and relevant should inform policy, but the existing research base is far too limited. Simply calling for more research that tracks students from high school through college completion will not do because this type of work, although potentially informative, is outdated by the time it is available or useful. This is a constraint imposed by the laws of space and time, which education researchers will not likely bend anytime soon.

An imperfect but potentially valuable solution is to conduct research that links measures at different points in time, such as test scores and grades in high school, to high school graduation, college enrollment, performance in college, and graduation from college. The high school measures that most strongly predict success in college could then be used to forecast the longer-term effects of an intervention.
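One simple version of this approach is a linear projection: estimate, in an earlier cohort, how college completion rates vary with a high school measure, then multiply an intervention’s short-term effect on that measure by the estimated slope. The sketch below uses invented completion rates by high school GPA purely for illustration, and the function names are hypothetical. The projection assumes the intervention affects completion only through the measured outcome, an assumption that, as the double-dose algebra study illustrates, does not always hold.

```python
# Hypothetical historical data: (high school GPA, share of students who later
# completed college). These numbers are invented for illustration only.
HISTORICAL = [(2.0, 0.30), (2.5, 0.42), (3.0, 0.55), (3.5, 0.66), (4.0, 0.78)]


def completion_slope(points):
    """OLS slope of completion rate on GPA (change in rate per GPA point)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    return sxy / sxx


def forecast_completion_effect(gpa_effect, points=HISTORICAL):
    """Projected change in completion rate from an intervention's effect on GPA.

    Assumes the intervention changes completion only through GPA and that
    the historical relationship still holds for the students it reaches.
    """
    return gpa_effect * completion_slope(points)


if __name__ == "__main__":
    # A hypothetical pilot that raised GPA by 0.2 points.
    effect = forecast_completion_effect(0.2)
    print(f"Forecast change in completion: {effect * 100:+.1f} percentage points")
```

With these invented numbers the slope is 0.24 per GPA point, so a 0.2-point gain projects to about 4.8 percentage points. A single linear fit is used here only for transparency; a real analysis might combine several intermediate measures.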

In the meantime, the available evidence suggests at least three lessons for policymakers and practitioners. First, government-driven assessment of high school quality should expand beyond the single year of test scores and graduation rates that are currently required by the federal Every Student Succeeds Act. Graduation rates are ripe for gaming, and performance on a single test provides little actionable information, especially in high schools, where instruction is largely course specific. States could expand their assessment of high school performance to include the kinds of courses taken and their difficulty, as well as student performance in those courses (measured, at least in part, by performance on end-of-course exams that cannot be easily gamed by the school).

Second, K–12 schools and districts should work to increase the number of students who take rigorous courses, which they could be encouraged to do by including course-taking measures in state accountability systems. There are obvious downsides to taking this idea too far—for example, students who cannot do basic math clearly will not be well served by a calculus course. But there is solid evidence of the benefits of certain types of courses, such as additional instruction in algebra for students with below-average math performance. Compelling evidence also shows that providing access to advanced coursework by screening all students—rather than just admitting those who volunteer to take, or are enrolled by their parents in, more rigorous classes—can identify and prepare more students with the potential to succeed, especially students of color.21

Finally, researchers and educators should collaborate on pilot interventions aimed at improving success in high school courses. These could be focused on content or on more general strategies aimed at helping students learn how to learn. Evaluating the impact of these trial efforts both in and beyond the course is necessary to identify and improve on successes and to learn from failures. For example, intensive tutoring targeted to academically struggling students has been successful in Chicago and could be piloted in other contexts in ways conducive to evaluation, such as by randomly selecting some schools to participate, using the other schools as a comparison group, and then expanding the intervention district-wide if it is successful.22

Academics are not all that matter for college completion, and policy efforts can clearly affect other malleable student characteristics linked to college success, for example through extracurricular activities, mentoring programs, and efforts to increase school safety. But developing clear, scientifically based measures of college readiness and making sensible use of them in policy and practice is one area that holds significant potential to increase students’ chances of earning a degree before they ever set foot on a college campus.

About the Author

Matthew M. Chingos is director of the Urban Institute’s Education Policy Program. He is also an executive editor of Education Next and coauthor of Game of Loans: The Rhetoric and Reality of Student Debt and Crossing the Finish Line: Completing College at America’s Public Universities.

© 2018 by the American Enterprise Institute and Third Way Institute. All rights reserved.

The American Enterprise Institute (AEI) and Third Way Institute are nonpartisan, not-for-profit, 501(c)(3) educational organizations. The views expressed in this paper are those of the author.

AEI does not take institutional positions on any issues.